How do I fix ErrImagePull while pulling pod's images from ACR in AKS?

Azure Kubernetes Infrastructure-as-code Azure-Bicep 💪

As an engineer, I want the pods I deploy to Azure Kubernetes Service (AKS) to pull their container images from a private Azure Container Registry (ACR). I have created an Azure Resource Group (RG), an AKS cluster and an ACR. I have submitted my very first pod manifest with kubectl, but all I can see is that the pod stays pending forever…

By the way, if you don’t want to read this, you can watch this :)

Check this repository for a shortcut to creating AKS and ACR with Azure Bicep.

    # Clone the repo

    git clone https://github.com/erudinsky/DevOps-Labs

    # Review and adjust params in ./src/parameters.json and ./src/provision.sh
    # Set executable permissions on file and run

    chmod +x ./src/provision.sh
    ./src/provision.sh

provision.sh includes:

    #!/bin/bash

    export RESOURCE_GROUP="pardus-rg"
    export LOCATION="westeurope"

    echo "Deploying resources to ... "
    echo "Resource group: $RESOURCE_GROUP"
    echo "Location: $LOCATION"

    az group create -n $RESOURCE_GROUP -l $LOCATION

    echo "Resource group $RESOURCE_GROUP has been created/updated"

    az deployment group create \
      --template-file "./main.bicep" \
      --parameters "./parameters.json" \
      --resource-group $RESOURCE_GROUP \

Adding image to ACR

I am going to authenticate with my private registry and then use nginx:latest as the image to store in the private ACR repository:

    az acr login -n ACR_NAME
    docker pull nginx
    docker tag nginx ACR_NAME.azurecr.io/mynginx
    docker push ACR_NAME.azurecr.io/mynginx

Let's also verify that the image has arrived in our ACR (we can do the same in the Azure Portal by going to ACR > Repositories).

    az acr repository list -n ACR_NAME

    [
      "mynginx"
    ]
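The pod manifests later in this post reference the image without a tag, which resolves to latest, so it can be worth confirming that this tag really exists in the repository. Here is a minimal sketch: az acr repository show-tags is the real CLI command, while the wrapper name acr_has_tag is a hypothetical helper I made up for illustration.

```shell
# acr_has_tag is a hypothetical helper, not part of the Azure CLI.
# $1 = registry name, $2 = repository, $3 = tag to look for
acr_has_tag() {
  # -o tsv prints one tag per line; grep -qxF matches the whole line literally
  az acr repository show-tags -n "$1" --repository "$2" -o tsv | grep -qxF "$3"
}
```

Usage would look like acr_has_tag ACR_NAME mynginx latest && echo "tag found".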

Creating pods

I am going to use az aks get-credentials to authenticate with my AKS cluster and then, with kubectl, submit two pods: one with an image from the public Docker registry and one with an image from the private ACR:

    az aks get-credentials -n AKS_NAME -g RESOURCE_GROUP

    kubectl run mynginx1 \
      --image=nginx \
      --image-pull-policy=Always \
      --dry-run=client \
      -o yaml > mynginx1.yaml

    kubectl run mynginx2 \
      --image=ACR_NAME.azurecr.io/mynginx \
      --image-pull-policy=Always \
      --dry-run=client \
      -o yaml > mynginx2.yaml

    kubectl apply -f mynginx1.yaml
    kubectl apply -f mynginx2.yaml
    kubectl get pods

    NAME       READY   STATUS         RESTARTS   AGE
    mynginx1   1/1     Running        0          14s
    mynginx2   0/1     ErrImagePull   0          2s

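With kubectl run I generated two YAML files, dumped below: 1) nginx from the public Docker Hub; 2) the image from the private ACR. I also add --image-pull-policy=Always so the image is always pulled from the registry and we won't bump into cached image layers during our further experiments. Note that Kubernetes defaults to IfNotPresent only for images with an explicit tag other than latest; for an untagged or :latest image, as here, the default is already Always, so the flag mainly makes the intent explicit (check the Kubernetes documentation on image pull policy for further details).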
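To make the defaulting rule concrete, here is a small sketch of how Kubernetes picks imagePullPolicy when the manifest leaves it out: an image pinned by digest or by a tag other than latest gets IfNotPresent, anything else gets Always. This is my own illustration of the documented rule, not code from Kubernetes, and default_pull_policy is a hypothetical name.

```shell
# default_pull_policy is a hypothetical helper that mirrors the documented rule.
# $1 = image reference as written in the pod spec
default_pull_policy() {
  case "$1" in
    # A digest (name@sha256:...) pins an exact image version
    *@*) echo "IfNotPresent"; return ;;
  esac
  # Inspect only the last path segment so a registry port
  # (host:5000/name) is not mistaken for a tag
  last="${1##*/}"
  case "$last" in
    *:latest) echo "Always" ;;        # explicit :latest tag
    *:*)      echo "IfNotPresent" ;;  # any other explicit tag
    *)        echo "Always" ;;        # no tag at all
  esac
}
```

For example, default_pull_policy ACR_NAME.azurecr.io/mynginx prints Always, while default_pull_policy nginx:1.21 prints IfNotPresent.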

Pod with image from public registry (authentication is not required)

    apiVersion: v1
    kind: Pod
    metadata:
      creationTimestamp: null
      labels:
        run: mynginx1
      name: mynginx1
    spec:
      containers:
      - image: nginx
        imagePullPolicy: Always
        name: mynginx1
        resources: {}
      dnsPolicy: ClusterFirst
      restartPolicy: Always
    status: {}

Pod with image from private registry (authentication is required)

    apiVersion: v1
    kind: Pod
    metadata:
      creationTimestamp: null
      labels:
        run: mynginx2
      name: mynginx2
    spec:
      containers:
      - image: ACR_NAME.azurecr.io/mynginx
        imagePullPolicy: Always
        name: mynginx2
        resources: {}
      dnsPolicy: ClusterFirst
      restartPolicy: Always
    status: {}

As we can see, pulling the image for pod mynginx2 fails with ErrImagePull. We can explore this error further with kubectl describe pod mynginx2 to see what exactly causes the issue:

    kubectl describe pod mynginx2
    Name:         mynginx2
    Namespace:    default
    Priority:     0
    Node:         aks-nodepool1-36348178-vmss000000/10.240.0.4
    Start Time:   Sun, 10 Oct 2021 22:04:51 +0200
    Labels:       run=mynginx2
    Annotations:  <none>
    Status:       Pending
    IP:           10.244.0.10
    IPs:
      IP:  10.244.0.10
    Containers:
      mynginx2:
        Container ID:
        Image:          ACR_NAME.azurecr.io/mynginx
        Image ID:
        Port:           <none>
        Host Port:      <none>
        State:          Waiting
          Reason:       ImagePullBackOff
        Ready:          False
        Restart Count:  0
        Environment:    <none>
        Mounts:
          /var/run/secrets/kubernetes.io/serviceaccount from default-token-7zgfm (ro)
    Conditions:
      Type              Status
      Initialized       True
      Ready             False
      ContainersReady   False
      PodScheduled      True
    Volumes:
      default-token-7zgfm:
        Type:        Secret (a volume populated by a Secret)
        SecretName:  default-token-7zgfm
        Optional:    false
    QoS Class:       BestEffort
    Node-Selectors:  <none>
    Tolerations:     node.kubernetes.io/not-ready:NoExecute op=Exists for 300s
                     node.kubernetes.io/unreachable:NoExecute op=Exists for 300s
    Events:
      Type     Reason     Age                   From               Message
      ----     ------     ----                  ----               -------
      Normal   Scheduled  3m41s                 default-scheduler  Successfully assigned default/mynginx2 to aks-nodepool1-36348178-vmss000000
      Normal   Pulling    2m6s (x4 over 3m40s)  kubelet            Pulling image "ACR_NAME.azurecr.io/mynginx"
      Warning  Failed     2m6s (x4 over 3m40s)  kubelet            Failed to pull image "ACR_NAME.azurecr.io/mynginx": rpc error: code = Unknown desc = failed to pull and unpack image "ACR_NAME.azurecr.io/mynginx:latest": failed to resolve reference "ACR_NAME.azurecr.io/mynginx:latest": failed to authorize: failed to fetch anonymous token: unexpected status: 401 Unauthorized
      Warning  Failed     2m6s (x4 over 3m40s)  kubelet            Error: ErrImagePull
      Normal   BackOff    114s (x6 over 3m40s)  kubelet            Back-off pulling image "ACR_NAME.azurecr.io/mynginx"
      Warning  Failed     99s (x7 over 3m40s)   kubelet            Error: ImagePullBackOff

There you go: failed to authorize: failed to fetch anonymous token: unexpected status: 401 Unauthorized. The kubelet tried to pull ACR_NAME.azurecr.io/mynginx:latest without credentials and the registry refused. This simply means that having AKS and ACR in the same tenant and subscription is not enough for the cluster to pull images from the registry. Let's fix the issue.
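When the describe output gets long, I find it handy to reduce it to a one-line verdict. The sketch below only recognises the 401 Unauthorized case seen above and lumps every other pull failure together; pull_failure_hint and its messages are hypothetical, chosen purely for illustration.

```shell
# pull_failure_hint is a hypothetical helper; it reads text from stdin,
# for example the output of `kubectl describe pod mynginx2`.
pull_failure_hint() {
  events="$(cat)"
  case "$events" in
    *"401 Unauthorized"*)
      # The registry answered, but the node presented no valid credentials
      echo "auth: the node has no valid credentials for the registry" ;;
    *ErrImagePull*|*ImagePullBackOff*)
      # Some other pull problem; the full kubelet message has the details
      echo "pull: the image pull failed for another reason" ;;
    *)
      echo "ok: no image pull failure found" ;;
  esac
}
```

It can be fed directly from kubectl: kubectl describe pod mynginx2 | pull_failure_hint.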

Enabling anonymous pull access (option #1)

For development purposes I can enable anonymous pull access on the ACR. Unfortunately it is not supported on the Basic SKU (only on Standard and Premium), so I am going to upgrade my ACR to the Standard SKU first.

Keep in mind that this makes your ACR images available to anyone in the world, since it allows anonymous pulls! I'd consider such a configuration only if you maintain a public registry (like Docker Hub) or if you are stuck troubleshooting and want to rule out some silly misconfiguration.

    az acr update -n ACR_NAME --sku Standard
    az acr update -n ACR_NAME --anonymous-pull-enabled

Since pulls are anonymous now, the kubelet will retry the pull for pod mynginx2 and it should succeed this time.
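Rather than re-running kubectl get pods by hand until the back-off timer fires, the retry can be awaited. Below is a minimal sketch built on kubectl wait and jsonpath queries, both standard kubectl features; the wrapper name wait_for_pod and the 120s default timeout are my own assumptions.

```shell
# wait_for_pod is a hypothetical helper.
# $1 = pod name, $2 = timeout (optional, defaults to 120s)
wait_for_pod() {
  if kubectl wait --for=condition=Ready "pod/$1" --timeout="${2:-120s}" >/dev/null 2>&1; then
    # Pod became Ready: print its phase (normally Running)
    kubectl get pod "$1" -o jsonpath='{.status.phase}'
  else
    # Timed out: print why the first container is still waiting,
    # e.g. ErrImagePull or ImagePullBackOff
    kubectl get pod "$1" -o jsonpath='{.status.containerStatuses[0].state.waiting.reason}'
  fi
  echo
}
```

Calling wait_for_pod mynginx2 blocks until the pod is Ready or the timeout expires.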

Let’s disable anonymous pull access and set the SKU back to Basic. Let’s also delete pod mynginx2 so we can repeat the experiment with the other options.

    az acr update -n ACR_NAME --anonymous-pull-enabled false
    az acr update -n ACR_NAME --sku Basic
    kubectl delete pod mynginx2
    pod "mynginx2" deleted

Azure AD Managed Identity (option #2)

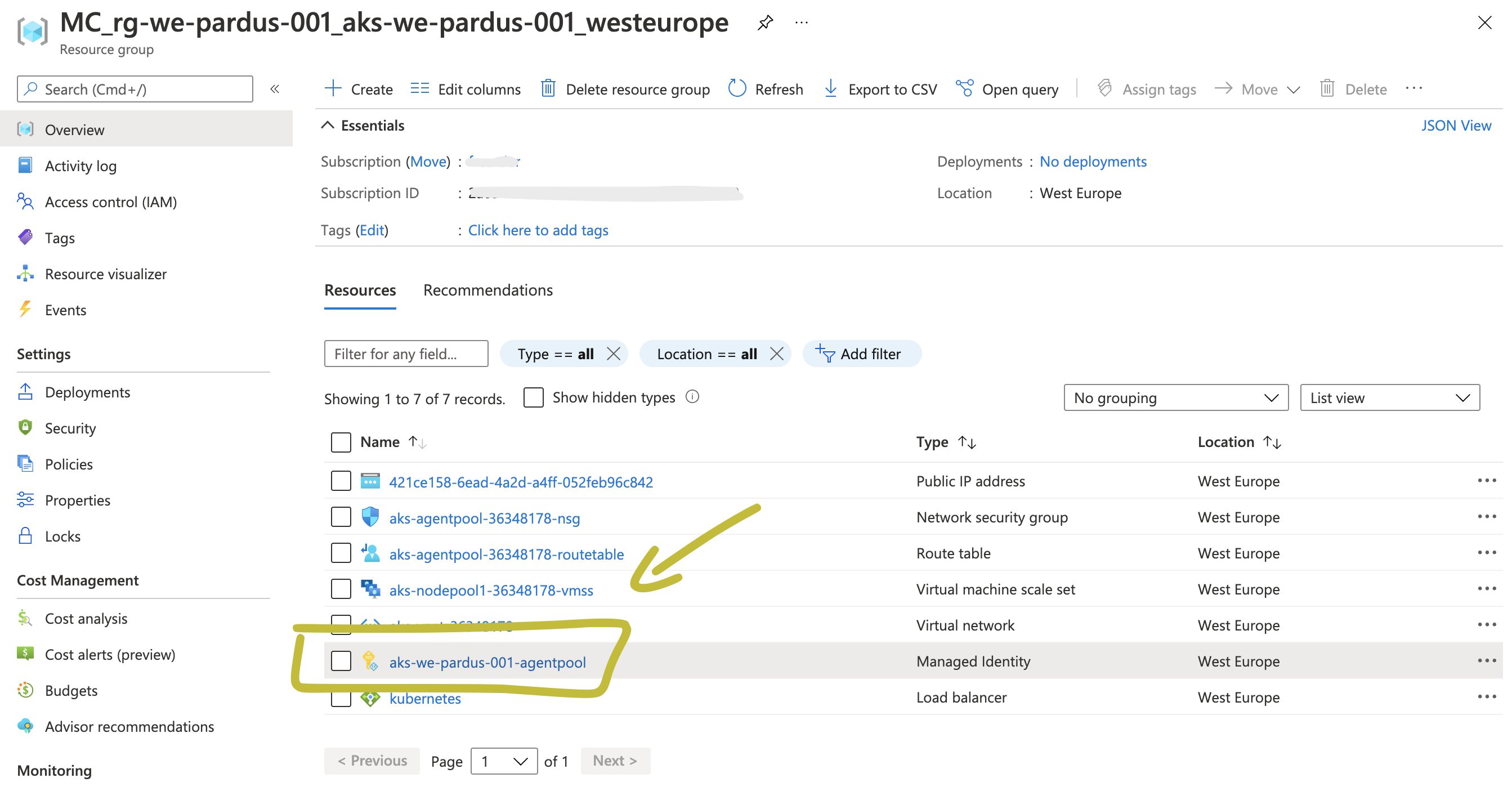

If we examine the cluster in the Portal (probably the easiest way of doing this), we can see that a managed identity was also created for us along with the AKS cluster. Managed identities simplify working with cloud resources: we don’t have to think about authentication or storing credentials, we only define which resource may access which other resource, and with what role.

That managed identity doesn’t have any role assignment yet, but we can add one with the following command, then create the pod again and see whether the ACR image can be pulled. Keep in mind that the command requires the Owner, Azure account administrator or Azure co-administrator role on the Azure subscription.

    az aks update -n AKS_NAME -g RESOURCE_GROUP --attach-acr ACR_NAME
    kubectl apply -f mynginx2.yaml

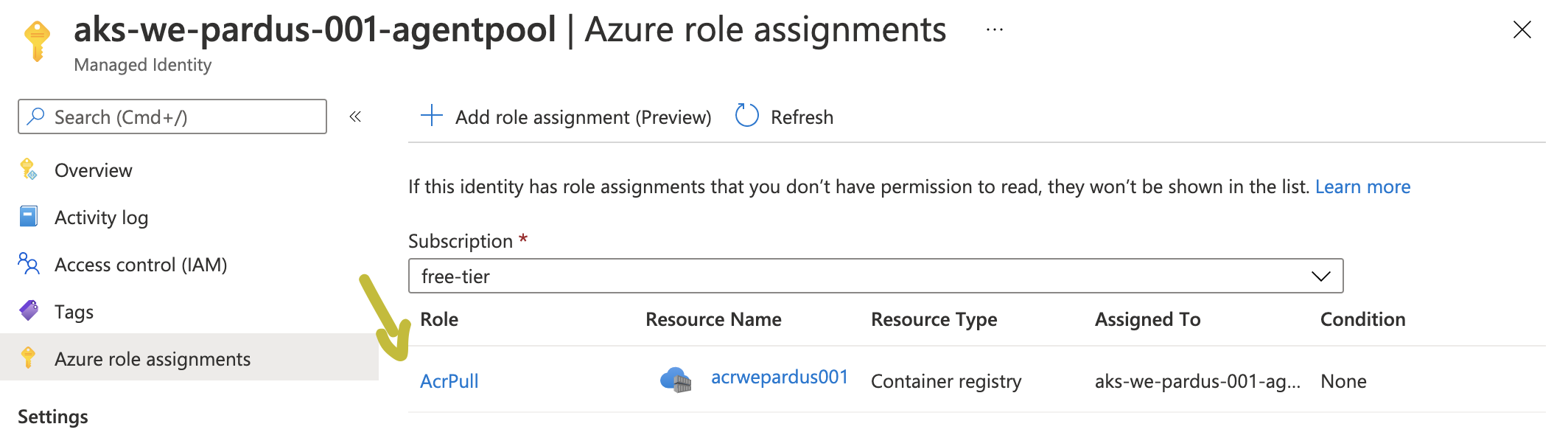

If we examine this managed identity a bit further under Azure AD > Enterprise applications, we can see that a managed identity is a special type of service principal. With the command above we assigned the AcrPull role to it, scoped to our ACR resource. Check this in the portal by going to MC_RESOURCE_GROUP_AKS_NAME_westeurope > AKS_NAME-agentpool > Azure role assignments.

So kubectl get pods should now show a successful pull.

    NAME       READY   STATUS    RESTARTS   AGE
    mynginx1   1/1     Running   0          66m
    mynginx2   1/1     Running   0          47s
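The role assignment can also be checked from the CLI instead of the portal. The following is a sketch under my own assumptions: the helper name kubelet_acr_roles is hypothetical, and it assumes the cluster pulls images with its kubelet managed identity (the AKS_NAME-agentpool identity), which it looks up through identityProfile.kubeletidentity.objectId.

```shell
# kubelet_acr_roles is a hypothetical helper.
# $1 = AKS name, $2 = resource group, $3 = ACR name
kubelet_acr_roles() {
  # Object ID of the identity the nodes use to pull images
  kubelet_id="$(az aks show -n "$1" -g "$2" \
    --query identityProfile.kubeletidentity.objectId -o tsv)"
  # Full resource ID of the registry, used as the assignment scope
  acr_id="$(az acr show -n "$3" --query id -o tsv)"
  # Names of the roles that identity holds on the registry
  az role assignment list --assignee "$kubelet_id" --scope "$acr_id" \
    --query "[].roleDefinitionName" -o tsv
}
```

After attaching the registry, kubelet_acr_roles AKS_NAME RESOURCE_GROUP ACR_NAME should list AcrPull; after detaching it, the list should come back empty.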

I am going to delete the pod and detach the integration so we can explore some further options:

    az aks update -n AKS_NAME -g RESOURCE_GROUP --detach-acr ACR_NAME
    kubectl delete pod mynginx2

Kubernetes Secret (option #3)

We create a Kubernetes Secret of type docker-registry and reference it explicitly in our pod through the imagePullSecrets property. Let’s generate the secret from the registry’s access keys.

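    # Registry name for az commands; the login server adds the .azurecr.io suffix
    ACR_NAME=ACR_NAME
    ACR_SERVER="$ACR_NAME.azurecr.io"

    # Enable the admin user so the registry exposes username/password access keys
    az acr update -n "$ACR_NAME" --admin-enabled true

    ACR_USERNAME=$(az acr credential show -n "$ACR_NAME" --query="username" -o tsv)
    ACR_PASSWORD=$(az acr credential show -n "$ACR_NAME" --query="passwords[0].value" -o tsv)

    # EMAIL is not set above: export it first, or drop --docker-email (it is optional)
    kubectl create secret docker-registry mysecret \
      --docker-server="$ACR_SERVER" \
      --docker-username="$ACR_USERNAME" \
      --docker-password="$ACR_PASSWORD" \
      --docker-email="$EMAIL" \
      --dry-run=client \
      -o yaml > mysecret.yaml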

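Now if we check mysecret.yaml, we can see that it contains a base64-encoded string under data > .dockerconfigjson. There are other ways to create secrets from other kinds of credentials; check the documentation. Because of --dry-run=client the secret exists only as a file so far, so it has to be submitted to the cluster with kubectl apply -f mysecret.yaml before a pod can reference it. I am going to generate a new version of the pod manifest with a reference to mysecret in it, then create this pod on my cluster.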
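To demystify the base64 string in .dockerconfigjson, here is a rough sketch of the JSON it encodes: an auths map keyed by registry server, holding the username, the password and an auth field that is base64 of username:password. The function name dockerconfigjson is hypothetical and the payload is simplified (no email field, no JSON escaping), so treat it as an illustration rather than a replacement for kubectl create secret.

```shell
# dockerconfigjson is a hypothetical helper that prints a simplified payload.
# $1 = registry server, $2 = username, $3 = password
# Note: values are not JSON-escaped, so quotes or backslashes would break it.
dockerconfigjson() {
  # auth = base64("username:password"); tr removes any line wrapping
  auth="$(printf '%s:%s' "$2" "$3" | base64 | tr -d '\n')"
  printf '{"auths":{"%s":{"username":"%s","password":"%s","auth":"%s"}}}\n' \
    "$1" "$2" "$3" "$auth"
}
```

Once the secret is on the cluster, the real payload can be compared with kubectl get secret mysecret -o jsonpath='{.data.\.dockerconfigjson}' | base64 --decode.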

    kubectl run mynginx2 \
      --image=ACR_NAME.azurecr.io/mynginx \
      --image-pull-policy=Always \
      --overrides='{ "spec": { "imagePullSecrets": [{"name": "mysecret"}] } }' \
      --dry-run=client \
      -o yaml > mynginx2.yaml

    kubectl apply -f mynginx2.yaml
    kubectl get pods
    NAME       READY   STATUS    RESTARTS   AGE
    mynginx1   1/1     Running   0          120m
    mynginx2   1/1     Running   0          93s

Great, the secret works.

Don’t use this option in production; consider using Managed Identity instead and let Azure take care of the trust between the two services.

Okay, these were three different options to authenticate and pull images from ACR into an AKS cluster. There is a list of available authentication options that I encourage you to review in order to learn more about service principals, the AKS cluster service principal, the various options with managed identity and repository-scoped access tokens.